
- 02/11/2025

Breaking the Bottleneck: The Dual Challenge of High-Performance Computing Systems

In today's rapidly advancing technological landscape, High-Performance Computing (HPC) is pushing boundaries across many domains. HPC executes complex computational tasks on supercomputers or computer clusters comprising thousands of processor cores. These tasks often involve massive data inputs and demand substantial processing power and high-speed data handling. USI targets the growing use of AI in this competitive market, leveraging its core strength in 3D packaging technology for various HPC modules. This article introduces HPC and then explores the most critical aspects of its development, discussing both the challenges and the innovations that address them.

Fig.1 USI 3D Packaging Technology

Introduction to High-Performance Computing (HPC)

HPC encompasses the theory, methods, technologies, and applications of parallel computing realized through supercomputers. Continuous innovation in processor, memory, and storage technologies provides robust computational resources for HPC systems. Modern processors utilize multi-core designs, enabling higher parallel processing capabilities. Advancements in memory and storage technologies, such as DDR5 memory and PCIe 4.0 storage, offer increased data transfer speeds and larger capacities, addressing the high bandwidth and low latency requirements of HPC systems.

HPC finds broad applications in scientific research, weather forecasting, simulations, and more. This diverse applicability allows various fields to benefit from its powerful computational capabilities. However, despite significant progress, numerous challenges remain in designing and deploying HPC systems.

Power Delivery Challenges in High-Performance Computing (HPC)

High-Performance Computing systems face significant power consumption and thermal management challenges. They are typically composed of numerous processors, each consuming substantial power, so minimizing energy loss is a primary design objective. Traditionally, power is distributed at a high voltage to reduce energy loss and then stepped down through multiple conversion stages before it reaches the microprocessor. In this process, the routing loss on the path from the DC-DC converter to the microprocessor plays a crucial role. These converters step the 12V or 48V DC bus voltage down to the specific voltage required by the processor core while increasing the current to the necessary level.
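The benefit of distributing power at a higher bus voltage can be illustrated with a short calculation. The following is a minimal sketch in Python that assumes an idealized, lossless converter. The 1000 W load, 0.8 V core voltage, and 1 milliohm distribution resistance are hypothetical values chosen for illustration, not figures from any specific system.

```python
# Illustrative only: load power, core voltage, and path resistance are assumed values.


def current_a(power_w: float, voltage_v: float) -> float:
    """Current needed to deliver a given power at a given voltage (I = P / V)."""
    return power_w / voltage_v


def conduction_loss_w(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Resistive loss on a path carrying that current (P_loss = I^2 * R)."""
    return current_a(power_w, voltage_v) ** 2 * resistance_ohm


LOAD_POWER_W = 1000.0       # assumed processor power
CORE_VOLTAGE_V = 0.8        # assumed processor core voltage
BUS_RESISTANCE_OHM = 0.001  # assumed 1 milliohm distribution path

if __name__ == "__main__":
    for bus_v in (12.0, 48.0):
        i_bus = current_a(LOAD_POWER_W, bus_v)
        loss = conduction_loss_w(LOAD_POWER_W, bus_v, BUS_RESISTANCE_OHM)
        print(f"{bus_v:.0f} V bus: {i_bus:.1f} A, {loss:.2f} W lost in distribution")

    # After the final step-down, the same power is delivered at a much higher current.
    i_core = current_a(LOAD_POWER_W, CORE_VOLTAGE_V)
    print(f"Core rail: {i_core:.0f} A at {CORE_VOLTAGE_V} V")
```

For the same delivered power and path resistance, moving from a 12V bus to a 48V bus cuts the bus current by a factor of 4 and the resistive loss by a factor of 16. The final conversion stage still has to supply the full core current, which is why the last segment of the path matters so much.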

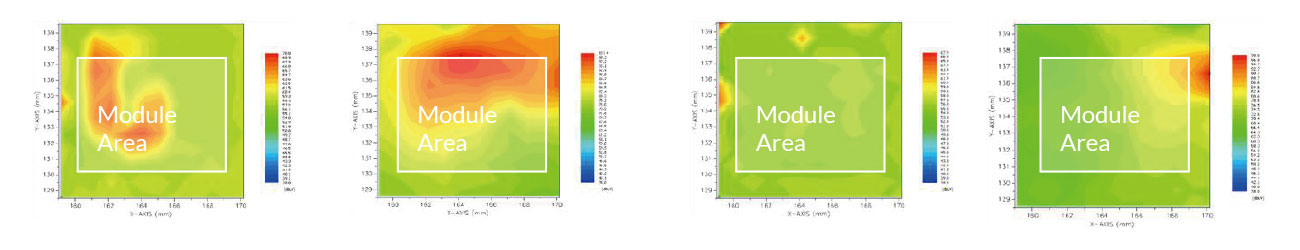

Fig.2 Power loss and heat dissipation are important factors in HPC systems

Losses and Impact of Multi-Stage Power Delivery Networks (PDNs)

Even when a voltage regulator supplies power to a chip over a short distance, a multi-stage Power Delivery Network (PDN) still experiences resistive (I²R) losses on the power rails, which lead to thermal issues. Parasitic inductance and capacitance along the path contribute further effects. Consequently, one of the most critical factors in power delivery design is the placement of the regulator on the PCB, which directly determines the resistance of the power rails feeding the processor pins.
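To make the link between regulator placement and rail resistance concrete, the sketch below estimates the DC resistance of a copper rail from its geometry and then the resulting I²R loss. It is a simplified model that treats the rail as a single uniform rectangular conductor. The rail width, copper thickness, distances, and 500 A load current are assumptions for illustration only, and real boards typically spread current across several planes.

```python
# Illustrative only: the rail geometry and load current are assumed values.

COPPER_RESISTIVITY_OHM_M = 1.68e-8  # approximate resistivity of copper near 20 degrees C


def rail_resistance_ohm(length_m: float, width_m: float, thickness_m: float) -> float:
    """DC resistance of a uniform rectangular copper rail (R = rho * L / A)."""
    return COPPER_RESISTIVITY_OHM_M * length_m / (width_m * thickness_m)


def rail_loss_w(load_current_a: float, resistance_ohm: float) -> float:
    """Resistive heating in the rail (P = I^2 * R)."""
    return load_current_a ** 2 * resistance_ohm


def rail_drop_v(load_current_a: float, resistance_ohm: float) -> float:
    """Voltage drop along the rail (V = I * R)."""
    return load_current_a * resistance_ohm


RAIL_WIDTH_M = 0.020        # assumed 20 mm wide rail
RAIL_THICKNESS_M = 70e-6    # assumed 70 micrometre copper
LOAD_CURRENT_A = 500.0      # assumed processor current

if __name__ == "__main__":
    for distance_mm in (50.0, 5.0):
        r = rail_resistance_ohm(distance_mm / 1000.0, RAIL_WIDTH_M, RAIL_THICKNESS_M)
        loss = rail_loss_w(LOAD_CURRENT_A, r)
        drop = rail_drop_v(LOAD_CURRENT_A, r)
        print(f"{distance_mm:.0f} mm from the load: R = {r * 1e3:.3f} mOhm, "
              f"loss = {loss:.1f} W, drop = {drop * 1e3:.0f} mV")
```

In this model the resistance, and therefore the loss at a given current, scales linearly with the distance between the regulator and the load, so a tenfold shorter path gives a tenfold lower loss.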

Vertically integrated Voltage Regulator Modules (VRMs) have become a popular way to minimize this resistance by positioning the regulator as close as possible to the Point-of-Load (POL). These modules integrate, within a single package, the power stage responsible for voltage conversion, the magnetic components that manage current and heat within the module, and the capacitors that regulate power before it reaches the processor. This allows the VRM to be placed physically closer to the POL, effectively reducing conduction loss and power dissipation.

Voltage Regulator Modules (VRMs) & Vertical Power Delivery (VPD)

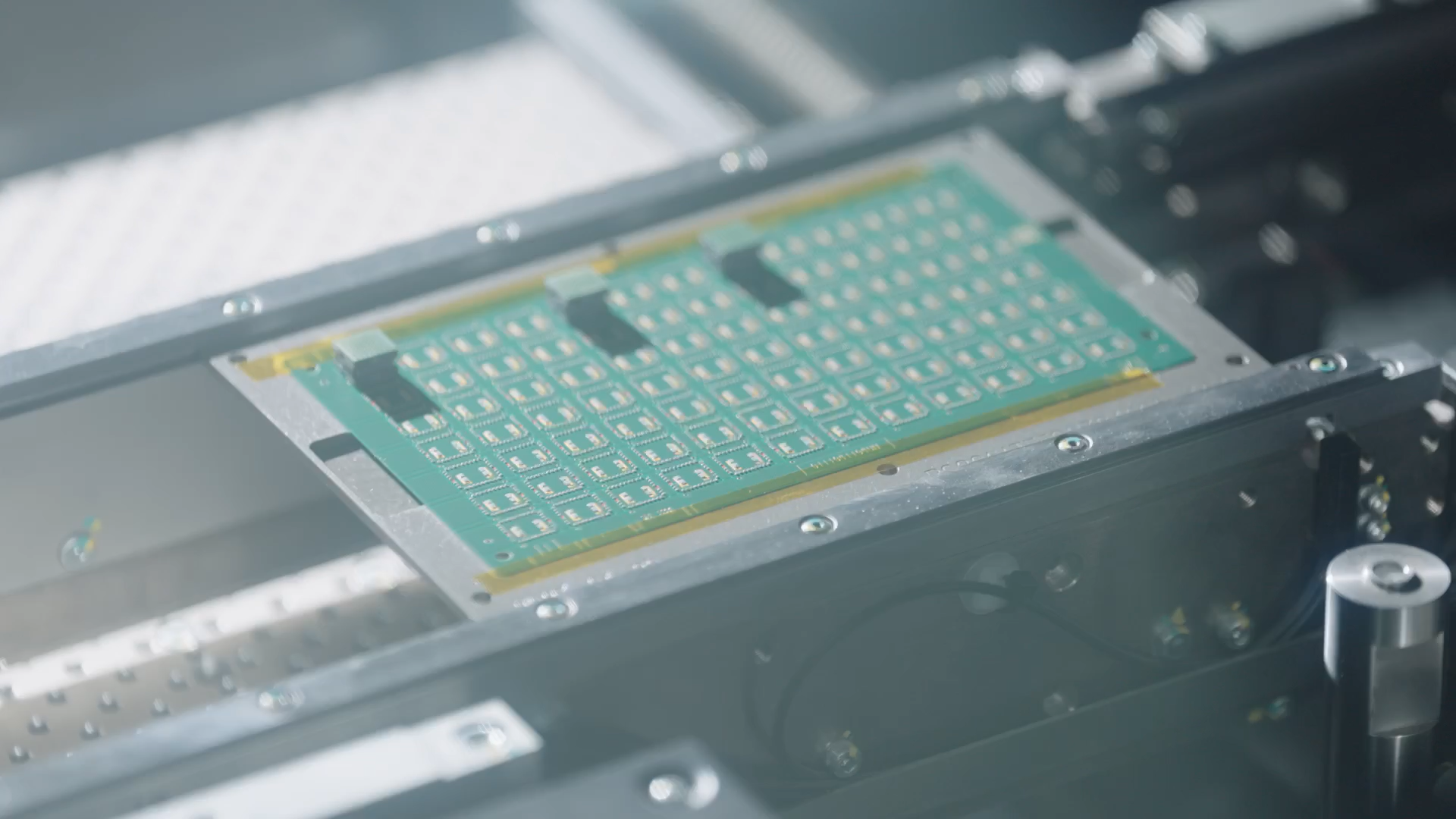

A VRM primarily comprises three parts: capacitors, inductors, and a power stage. For example, a dual-phase power module integrates the components of two phases of a multi-phase buck regulator onto a single substrate, forming a single device. These modules are then deployed in an array to create a multi-phase system. As the performance and power demands of AI accelerators continue to grow, the number of power stages may increase further.
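The way phase count grows with load current can be sketched with simple arithmetic. The example below assumes the load current is shared equally among phases and that each phase has a fixed maximum current rating. The 70 A per-phase rating and 1000 A load are hypothetical values, and the function names are introduced here for illustration only.

```python
import math

# Illustrative only: the per-phase rating and load current are assumed values.


def phases_needed(total_current_a: float, max_phase_current_a: float) -> int:
    """Smallest number of phases that keeps each phase within its rating."""
    return math.ceil(total_current_a / max_phase_current_a)


def modules_needed(phase_count: int, phases_per_module: int = 2) -> int:
    """Number of modules required, with dual-phase modules as the default."""
    return math.ceil(phase_count / phases_per_module)


def per_phase_current_a(total_current_a: float, phase_count: int) -> float:
    """Current carried by each phase, assuming ideal equal sharing."""
    return total_current_a / phase_count


if __name__ == "__main__":
    load_a = 1000.0   # assumed accelerator core current
    rating_a = 70.0   # assumed maximum current per phase

    phases = phases_needed(load_a, rating_a)
    modules = modules_needed(phases)
    # A full array of dual-phase modules provides modules * 2 phases.
    shared = per_phase_current_a(load_a, modules * 2)
    print(f"{phases} phases needed, {modules} dual-phase modules, "
          f"{shared:.1f} A per phase when all module phases share the load")
```

Under these assumptions, any increase in load current that exceeds the combined rating of the installed phases requires another module in the array.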

Beyond component integration and performance enhancement, a new trend in powering AI accelerators is Vertical Power Delivery (VPD). VPD moves the voltage regulators directly beneath the processor, onto the back of the PCB. By transmitting power through a shorter vertical path, VPD significantly reduces PDN resistance and losses, achieving higher power efficiency at higher currents and lower processor core voltages. Furthermore, by freeing the PCB area that the regulators would otherwise occupy, VPD allows AI processor designers to add memory and I/O routing, further enhancing processing performance.
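Why a lower PDN resistance matters more as currents rise and core voltages fall can be shown with a small model. The sketch below treats the PDN as a single series resistance between the regulator output and the load, so the fraction of power reaching the load is V / (V + I·R). The two resistance values are hypothetical placeholders for a longer lateral path and a shorter vertical path, not measured data.

```python
# Illustrative only: the core voltage and both resistance values are assumed.


def pdn_efficiency(core_voltage_v: float, load_current_a: float,
                   pdn_resistance_ohm: float) -> float:
    """Fraction of regulator output power that reaches the load.

    With a series resistance R, the load receives V * I and the path
    dissipates I^2 * R, so the ratio simplifies to V / (V + I * R).
    """
    drop_v = load_current_a * pdn_resistance_ohm
    return core_voltage_v / (core_voltage_v + drop_v)


CORE_VOLTAGE_V = 0.8                  # assumed core voltage
LATERAL_RESISTANCE_OHM = 0.0002       # assumed 0.2 milliohm lateral path
VERTICAL_RESISTANCE_OHM = 0.00005     # assumed 0.05 milliohm vertical path

if __name__ == "__main__":
    for current in (250.0, 500.0, 1000.0):
        lateral = pdn_efficiency(CORE_VOLTAGE_V, current, LATERAL_RESISTANCE_OHM)
        vertical = pdn_efficiency(CORE_VOLTAGE_V, current, VERTICAL_RESISTANCE_OHM)
        print(f"{current:.0f} A: lateral {lateral:.1%}, vertical {vertical:.1%}")
```

In this model the gap between the two paths widens as the current increases, which is consistent with the motivation for shortening the delivery path at high currents and low core voltages.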

Both solutions, integrated VRMs and VPD, offer distinct advantages and disadvantages, so choosing the one best suited to the customer's specific needs is paramount. More broadly, designing and deploying HPC systems that deliver computing power efficiently requires managing and optimizing power consumption, thermal behavior, and resource allocation.
